- How the IEEE 754 standard fits in with NVIDIA GPUs

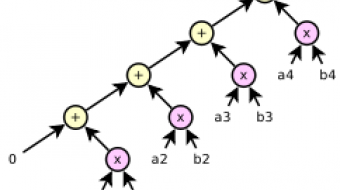

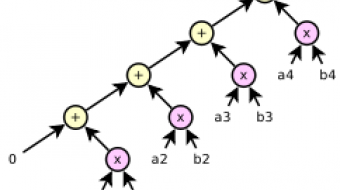

- How fused multiply-add improves accuracy

- There's more than one way to compute a dot product (we present three)

- How to make sense of different numerical results between CPU and GPU

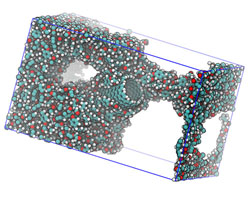

Graphic Processor Units for Many-particle Dynamics


© 2009-2025 by GPIUTMD


NVIDIA is calling on researchers worldwide to submit their innovations for the NVIDIA Global Impact Award, an annual grant of $150,000 for groundbreaking work that addresses the world's most important social and humanitarian problems.

With CUDA 6, we’re introducing Unified Memory, one of the most dramatic programming-model improvements in the history of the CUDA platform. In a typical PC or cluster node today, the memories of the CPU and GPU are physically distinct and separated by the PCI-Express bus. Before CUDA 6, that is exactly how the programmer had to view things: data shared between the CPU and GPU had to be allocated in both memories and explicitly copied between them by the program. This adds considerable complexity to CUDA programs.


Copyright © 2014. GPIUTMD - Graphic Processor Units for Many-particle Dynamics


Isfahan University of Technology

Address: Esteghlal Sq., Isfahan, Iran

Postal Code: 8415683111

Phone: +98 311 391 3110

Fax: +98 311 391 2718