Simulations of Earth and space phenomena at NASA’s Goddard Space Flight Center are getting a boost from graphics processing units (GPUs). Early results on small laboratory GPU systems and a new GPU cluster at the NASA Center for Climate Simulation (NCCS) demonstrate potential for significant speedups versus conventional computer processors.

In personal computers, gaming devices, and mobile phones, GPUs specialize in fast rendering and manipulation of graphics. In some supercomputers, GPUs are playing a new role as accelerators that can take over many calculations from conventional central processing units (CPUs). Because GPUs have delivered performance increases of 20x or more in certain cases, the supercomputing community has great interest in them for simulation and data analysis. Tellingly, the supercomputers currently ranked first and third in the world both include GPUs.

At Goddard, scientists and engineers have used systems ranging from one to four GPUs in laboratories up to a 64-GPU IBM iDataPlex cluster that NCCS made operational in May (see Figure 1 below). “We have deployed an application testing environment within our Discover supercomputing cluster,” said Dan Duffy, NCCS lead architect. “The GPUs have been deployed on nodes with the same system configuration as the rest of Discover. Therefore, users can easily port their application to the GPUs and perform scalability studies.”

Figure 1: In May, the NASA Center for Climate Simulation (NCCS) made operational an IBM iDataPlex cluster including 64 graphics processing units (GPUs). The GPU cluster fits inside the two cabinets at the end of the row. This newest part of the NCCS Discover supercomputer is capable of 36.4 trillion floating-point operations per second (teraflops) peak.

Working Component by Component

The largest consumer of NCCS resources is Goddard’s Global Modeling and Assimilation Office (GMAO), which is adapting its Goddard Earth Observing System Model, Version 5 (GEOS-5) atmospheric model so that it can run on any combination of GPUs and CPUs. “We want the code to look about the same for scientists who have been using it for years and produce correct results wherever it runs,” said Matt Thompson, GMAO senior scientific software engineer.

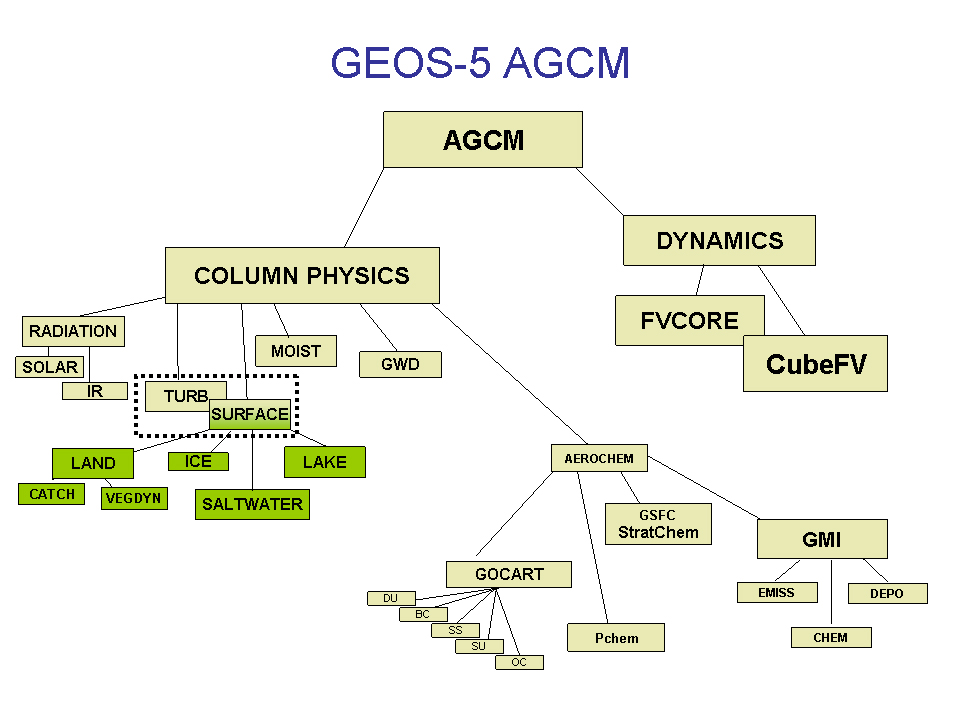

GEOS-5 divides the atmosphere into a grid of boxes stacked 72 levels high and combines two simulation techniques. Column physics solves equations inside the boxes, while dynamics moves the results across boxes and around the globe. GEOS-5 has a hierarchy of physics and dynamics components focusing on specific phenomena (see Figure 2 below). GMAO programmers have been working through the model component by component, porting each one to GPUs, which present distinct advantages and challenges.
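The toy Python sketch below illustrates the structural difference between the two techniques. It is not GEOS-5 code: the grid size, the uniform 1 percent damping standing in for "physics," and the first-order upwind advection standing in for "dynamics" are all hypothetical choices made for illustration. The physics step updates each column using only its own 72 levels, so every column could in principle be processed independently, the kind of data parallelism a GPU's many cores are built for. The dynamics step, by contrast, needs values from neighboring columns.

```python
# Toy illustration only (not GEOS-5 code). All names, sizes, and formulas
# here are hypothetical stand-ins chosen to show structure, not physics.
NX, NY, NLEV = 4, 3, 72  # 72 vertical levels as in GEOS-5; horizontal size is arbitrary


def column_physics(column):
    """Hypothetical per-column update: a uniform 1% damping.
    It reads and writes only this column's own levels."""
    return [t - 0.01 * t for t in column]


def advect_x(field, courant=0.5):
    """Hypothetical first-order upwind advection in x with periodic wrap.
    Each box's new value depends on its western neighbor's value."""
    new = [[[0.0] * NLEV for _ in range(NY)] for _ in range(NX)]
    for i in range(NX):
        west = (i - 1) % NX  # periodic boundary, like wrapping around the globe
        for j in range(NY):
            for k in range(NLEV):
                new[i][j][k] = field[i][j][k] - courant * (field[i][j][k] - field[west][j][k])
    return new


def step(field):
    # "Physics": every (i, j) column is independent of the others.
    field = [[column_physics(field[i][j]) for j in range(NY)] for i in range(NX)]
    # "Dynamics": values move between neighboring columns.
    return advect_x(field)


field = [[[0.0] * NLEV for _ in range(NY)] for _ in range(NX)]
field[1][1] = [300.0] * NLEV  # one hypothetical "warm" column
out = step(field)
```

Because the physics loop has no dependencies between columns, it could be split across threads in any order; the advection loop cannot be computed for one column without first reading its neighbor.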

Figure 2: The Goddard Earth Observing System Model, Version 5 (GEOS-5) atmospheric general circulation model (AGCM) consists of a hierarchy of components focusing on specific phenomena.

GPUs offer a large number of streaming processing cores for performing calculations. For instance, the NCCS cluster’s 64 NVIDIA Tesla M2070 GPUs have 28,672 total cores, nearly as many cores as the rest of the Discover supercomputer. However, since GPUs only perform simple arithmetic, they must be connected to conventional CPUs for executing complex applications. “A GPU does not see the outside world, and one frequently must copy data from CPU to GPU and back again,” explained Tom Clune, Advanced Software and Technology Group (ASTG) lead in the Software Integration and Visualization Office (SIVO).

To maximize a GPU’s efficiency, a programmer has to manage memory explicitly so that data reaches the GPU cores when they are ready to compute. As GMAO ports its GEOS-5 components, “the hope is to put the most computationally intensive kernels on the GPUs,” Thompson said. “If I get enough or most of the model on GPUs, we can start using strategies that help minimize that costly data transfer.”
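Thompson’s point about data transfer can be made concrete with a simple cost model. The Python sketch below assumes, hypothetically, that each CPU-to-GPU or GPU-to-CPU copy costs the same fixed time; the kernel names and timings are placeholders, not GEOS-5 measurements. It compares porting kernels one at a time, where each kernel copies its data to the GPU and back, with keeping data resident on the GPU across a sequence of kernels.

```python
# Hypothetical cost model; kernel names and timings are placeholders,
# not measurements from GEOS-5 or the NCCS cluster.
from dataclasses import dataclass


@dataclass
class Kernel:
    name: str
    gpu_compute_s: float  # time the kernel spends computing on the GPU


def time_with_round_trips(kernels, transfer_s):
    """Each kernel copies its data CPU->GPU before running and GPU->CPU after."""
    return sum(2 * transfer_s + k.gpu_compute_s for k in kernels)


def time_with_resident_data(kernels, transfer_s):
    """Data is copied to the GPU once, stays resident across all kernels,
    and is copied back once at the end."""
    return 2 * transfer_s + sum(k.gpu_compute_s for k in kernels)


kernels = [Kernel("kernel_a", 0.020), Kernel("kernel_b", 0.010), Kernel("kernel_c", 0.005)]
print(time_with_round_trips(kernels, transfer_s=0.010))
print(time_with_resident_data(kernels, transfer_s=0.010))
```

With three kernels, the round-trip approach pays for six transfers and the resident approach pays for two, so the gap grows as more of the model runs on the GPU.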

In summer 2009, Thompson started with GEOS-5’s radiation component because it accounts for more than half of the column physics computation. It took 4 months to port just the solar radiation sub-component to GMAO’s 4-GPU cluster, in part because programming tools were still immature. SIVO ASTG’s Hamid Oloso recalled similar experiences with some GEOS-5 components in a summer 2010 GPU study. The situation has improved with more robust versions of NVIDIA’s CUDA C software development toolkit and the Portland Group, Inc.’s (PGI) CUDA Fortran compiler; the latter is key for GEOS-5, which is written primarily in Fortran. Typical component porting times are now 2 weeks to 1 month.

Once ported, full GEOS-5 components have run anywhere from no faster to 20x faster on a GPU than on a single conventional CPU core, and some radiation sub-components have shown larger gains:

| GEOS-5 Component | Port Organization | Speedup (GPU vs. one CPU core) | CPU Core Used for Comparison |
|---|---|---|---|
| Radiation | GMAO | 20x | Intel Xeon 5600 “Westmere” |
| Solar Radiation | GMAO | 45x | Intel Xeon 5600 “Westmere” |
| Solar Radiation | SIVO | 48x | Intel Xeon 5500 “Nehalem” |
| Infrared Radiation | GMAO | 30x | Intel Xeon 5600 “Westmere” |
| Cloud | GMAO | 19x | Intel Xeon 5600 “Westmere” |
| Gravity Wave Drag | GMAO | 16x | Intel Xeon 5600 “Westmere” |
| Turbulence | GMAO | 3x | Intel Xeon 5600 “Westmere” |
| Chemistry | SIVO | 1x | Intel Xeon 5500 “Nehalem” |
| Dynamics | SIVO | 2x–3x | Intel Xeon 5500 “Nehalem” |

The quoted component speedups come from runs of the full GEOS-5 model. Because only a small number of GPUs are available, these GEOS-5 runs typically use grid boxes 222 kilometers wide. At that coarse resolution, column physics takes up 75 percent of the run time. GMAO has been experimenting with high-resolution runs of GEOS-5, in which each doubling of resolution requires 10 times more computing power. When model resolution reaches 10 kilometers, dynamics takes up 90 percent of the run time. In a preliminary demonstration, GMAO research meteorologist Bill Putman ported the advection core of dynamics to a GPU. Running the advection core by itself, he saw speedups of 70x to 80x versus an Intel Xeon 5500 “Nehalem” core. GMAO and SIVO estimate that GEOS-5 overall could realize a 2x to 4x improvement in performance by using GPUs.
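Amdahl’s law, a standard formula rather than anything specific to GEOS-5, shows why large component speedups translate into much smaller whole-model gains: the portions of the run that are not accelerated come to dominate. The Python sketch below applies it to the coarse-resolution figure of 75 percent of run time in column physics, under the hypothetical assumption that everything else runs at 1x. It also converts the rule of thumb of 10 times more computing power per doubling of resolution into a cost ratio, assuming that factor holds across the whole range from 222 to 10 kilometers. This is not necessarily how GMAO and SIVO arrived at their estimate.

```python
import math


def amdahl_speedup(fractions_and_speedups):
    """Overall speedup (Amdahl's law) when each fraction f of the original
    run time is accelerated by factor s. Time not listed runs at 1x."""
    accelerated = sum(f for f, _ in fractions_and_speedups)
    if accelerated > 1:
        raise ValueError("fractions exceed 100% of run time")
    new_time = (1.0 - accelerated) + sum(f / s for f, s in fractions_and_speedups)
    return 1.0 / new_time


def relative_cost(coarse_km, fine_km, cost_per_doubling=10.0):
    """Computing cost of refining the grid from coarse_km to fine_km, assuming
    each halving of grid spacing multiplies cost by cost_per_doubling."""
    doublings = math.log2(coarse_km / fine_km)
    return cost_per_doubling ** doublings


# Column physics = 75% of run time at 222 km; dynamics assumed to stay at 1x.
print(amdahl_speedup([(0.75, float("inf"))]))  # upper bound: physics takes zero time
print(amdahl_speedup([(0.75, 20.0)]))          # physics sped up 20x
print(relative_cost(222, 10))                  # 222 km -> 10 km boxes
```

Under these assumptions, even an infinitely fast column physics would cap the overall speedup at 4x (1 / 0.25), and a 20x physics speedup gives roughly 3.5x. Going from 222-kilometer to 10-kilometer boxes is about 4.5 doublings, or roughly 30,000 times the computing power.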

Additional Models

While GMAO continues their porting efforts, SIVO will be researching how GPUs might benefit other NASA Earth science models. One effort will investigate different GPU programming paradigms for the Goddard Institute for Space Studies (GISS) ModelE climate model. Another will apply CUDA to a Goddard snowflake formation model called Snowfake. SIVO and NCCS personnel also will serve as consultants for the NCCS GPU cluster.

Across Goddard’s campus, one group that will soon access that cluster is the Geospace Physics Laboratory. Space scientist John Dorelli and collaborators are running a global model of the magnetosphere, the region where the solar wind interacts with Earth’s magnetic field. Rather than adapt existing code, “we decided that it was worth it to start with a clean slate to take advantage of all the opportunities for optimization made possible by the GPU hardware,” Dorelli said. A scaled-down version of the model written in CUDA C ran 58 times faster on one of their in-house GPUs than on a CPU. Envisioning speedups of 100x, “the hope is to develop a global magnetosphere code capable of performing at very high resolution in near real time for space weather applications,” Dorelli said.

These potentially large performance gains come with an added benefit: for a given amount of computation, GPUs use considerably less electricity than CPUs. Reduced operational costs open the door to building more powerful computing systems sooner than conventional approaches allow. If application testing leads NCCS to deploy hundreds of GPUs, this technology may accelerate not only the simulation codes but also NASA’s ability to tackle science problems that are impossible on today’s supercomputers.