The NVIDIA® CUDA® Toolkit provides a comprehensive development environment for C and C++ developers building GPU-accelerated applications. The CUDA Toolkit includes a compiler for NVIDIA GPUs, math libraries, and tools for debugging and optimizing the performance of your applications. Additionally, CUDA 5 introduces several new tools and features that make it easier than ever to add GPU acceleration to your applications.

New Nsight Eclipse Edition helps you explore the power of GPU computing with the productivity of Eclipse on Linux and Mac OS X

• Develop, debug, and profile your GPU application all within a familiar Eclipse-based IDE

• Integrated expert analysis system provides automated performance analysis and step-by-step guidance to fix performance bottlenecks in the code

• Easily port CPU loops to CUDA kernels with automatic code refactoring

• Semantic highlighting of CUDA code makes it easy to differentiate GPU code from CPU code

• Integrated CUDA code samples make it quick and easy to get started

• Generate code faster with CUDA-aware auto code completion and inline help
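To make the idea of porting a CPU loop to a CUDA kernel concrete, here is a minimal hand-written sketch of the general pattern: the loop index becomes the global thread index, and a bounds check replaces the loop condition. It is not the code Nsight's refactoring generates; the names `saxpy_cpu`, `saxpy_kernel`, and `saxpy_gpu` are hypothetical.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Original CPU loop: y[i] = a * x[i] + y[i] for every i.
void saxpy_cpu(int n, float a, const float *x, float *y) {
    for (int i = 0; i < n; ++i) y[i] = a * x[i] + y[i];
}

// Ported loop body (hypothetical name): one GPU thread per iteration.
// The bounds check handles the last, partially filled block.
__global__ void saxpy_kernel(int n, float a, const float *x, float *y) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) y[i] = a * x[i] + y[i];
}

// Host wrapper: copy inputs to the GPU, launch, copy the result back.
// Returns 0 on success, -1 on any CUDA error.
int saxpy_gpu(int n, float a, const float *x, float *y) {
    size_t bytes = n * sizeof(float);
    float *dx = NULL, *dy = NULL;
    if (cudaMalloc(&dx, bytes) != cudaSuccess) return -1;
    if (cudaMalloc(&dy, bytes) != cudaSuccess) { cudaFree(dx); return -1; }
    cudaMemcpy(dx, x, bytes, cudaMemcpyHostToDevice);
    cudaMemcpy(dy, y, bytes, cudaMemcpyHostToDevice);
    saxpy_kernel<<<(n + 255) / 256, 256>>>(n, a, dx, dy);
    cudaError_t err = cudaGetLastError();  // catches launch errors
    if (err == cudaSuccess)                // blocking copy also waits for the kernel
        err = cudaMemcpy(y, dy, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dx);
    cudaFree(dy);
    return err == cudaSuccess ? 0 : -1;
}

int main() {
    const int n = 1000;
    float x[n], y_cpu[n], y_gpu[n];
    for (int i = 0; i < n; ++i) { x[i] = (float)i; y_cpu[i] = y_gpu[i] = 1.0f; }
    saxpy_cpu(n, 2.0f, x, y_cpu);
    if (saxpy_gpu(n, 2.0f, x, y_gpu) != 0) { std::printf("CUDA error\n"); return 1; }
    int mismatches = 0;
    for (int i = 0; i < n; ++i) mismatches += (y_cpu[i] != y_gpu[i]);
    std::printf("mismatches: %d\n", mismatches);
    return 0;
}
```

Comparing the CPU and GPU results element by element, as `main` does, is a simple way to check that a ported loop still computes the same thing.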

GPU-callable libraries now possible with GPU Library Object Linking

• Compile independent sources to GPU object files and link them together into a larger application

• Design plug-in APIs that allow developers to extend the functionality of your kernels

• Efficient and familiar process for developing large GPU applications

• Enables a third-party ecosystem for GPU-callable libraries
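As a sketch of what linking GPU object files looks like, assuming nvcc's `-dc` flag (compile to relocatable device code) and a GPU of compute capability 2.0 or higher: to keep everything in one listing, a single file plays both roles. Built with `-DPART_LIB` it contains only a "library" device function; built with `-DPART_APP` it contains only the kernel that calls it, and the device linker resolves the call. With neither macro it builds as one ordinary program. The file name, the `PART_LIB`/`PART_APP` macros, and `scale_value` are hypothetical.

```cuda
// separate_compilation_demo.cu (hypothetical file name)
// Single-file build:
//   nvcc separate_compilation_demo.cu -o demo
// Split build, emulating an independently compiled GPU library:
//   nvcc -dc -DPART_LIB separate_compilation_demo.cu -o lib.o
//   nvcc -dc -DPART_APP separate_compilation_demo.cu -o app.o
//   nvcc lib.o app.o -o demo
#include <cstdio>
#include <cuda_runtime.h>

#if defined(PART_LIB) || !defined(PART_APP)
// "Library" side: a device function that other GPU objects can call once linked.
__device__ float scale_value(float x, float s) { return x * s; }
#endif

#if defined(PART_APP) || !defined(PART_LIB)
// "Application" side: only a declaration is needed here; in the split build
// the device linker resolves it against lib.o.
__device__ float scale_value(float x, float s);

__global__ void scale_kernel(float *data, int n, float s) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] = scale_value(data[i], s);
}

// Scales n floats by s on the GPU; returns 0 on success, -1 on a CUDA error.
int scale_on_gpu(float *host, int n, float s) {
    size_t bytes = n * sizeof(float);
    float *dev = NULL;
    if (cudaMalloc(&dev, bytes) != cudaSuccess) return -1;
    cudaMemcpy(dev, host, bytes, cudaMemcpyHostToDevice);
    scale_kernel<<<(n + 255) / 256, 256>>>(dev, n, s);
    cudaError_t err = cudaGetLastError();
    if (err == cudaSuccess)
        err = cudaMemcpy(host, dev, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dev);
    return err == cudaSuccess ? 0 : -1;
}

int main() {
    float v[4] = {1.0f, 2.0f, 3.0f, 4.0f};
    if (scale_on_gpu(v, 4, 2.0f) != 0) { std::printf("CUDA error\n"); return 1; }
    std::printf("%g %g %g %g\n", v[0], v[1], v[2], v[3]);  // prints "2 4 6 8"
    return 0;
}
#endif
```

In a real library the two halves would live in separate source files; only the device function's declaration needs to be shared, for example through a header.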

GPUDirect RDMA provides the fastest possible communication between GPUs and other PCIe devices

• Direct memory access (DMA) between the NIC and GPU without the need for CPU-side data buffering

• Significantly improved MPI_Sendrecv efficiency between GPUs and other nodes in a network

• Eliminates CPU bandwidth and latency bottlenecks

• Works with a variety of third-party network and storage devices

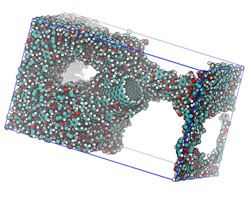

Dynamic Parallelism enables programmers to easily accelerate parallel nested loops on the new Kepler GK110 GPUs

• Developers can easily spawn new parallel work from within GPU code

• Minimizes the back-and-forth between the CPU and GPU

• Enables GPU acceleration for a broader set of popular algorithms, including the adaptive mesh refinement used in aerospace and automotive computational fluid dynamics (CFD) simulations

• Supported natively on Kepler II architecture GPUs; a preview programming guide and whitepaper are available today
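The sketch below shows the kind of irregular nested loop Dynamic Parallelism targets, under stated assumptions: a GPU of compute capability 3.5 or higher and a build such as `nvcc -arch=sm_35 -rdc=true dp_demo.cu -o dp_demo -lcudadevrt` (adjust `-arch` to your GPU). The outer loop runs on the GPU with one thread per row, and each thread launches a child grid sized to its own row, so rows of different lengths get exactly the work they need without returning to the CPU. The kernel and function names are hypothetical.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Inner loop, launched from the GPU: one thread per column of one row.
// Row r of the lower triangle has r + 1 entries.
__global__ void child_row(int *out, int row, int width) {
    int j = blockIdx.x * blockDim.x + threadIdx.x;
    if (j <= row) out[row * width + j] = row + j;
}

// Outer loop: one thread per row, each launching a child grid whose size
// depends on that row's length.
__global__ void parent_rows(int *out, int rows, int width) {
    int r = blockIdx.x * blockDim.x + threadIdx.x;
    if (r < rows) {
        int len = r + 1;
        child_row<<<(len + 127) / 128, 128>>>(out, r, width);
    }
}

// Fills the lower triangle of a rows x rows matrix; entries above it stay -1.
// Returns 0 on success, -1 on any CUDA error.
int fill_triangle(int *host, int rows) {
    size_t bytes = (size_t)rows * rows * sizeof(int);
    int *dev = NULL;
    if (cudaMalloc(&dev, bytes) != cudaSuccess) return -1;
    cudaMemset(dev, 0xFF, bytes);  // every byte 0xFF, so each int reads as -1
    parent_rows<<<(rows + 63) / 64, 64>>>(dev, rows, rows);
    cudaError_t err = cudaGetLastError();
    // A parent grid is not complete until all of its child grids complete,
    // so one host-side synchronize waits for the whole tree of launches.
    if (err == cudaSuccess) err = cudaDeviceSynchronize();
    if (err == cudaSuccess) err = cudaMemcpy(host, dev, bytes, cudaMemcpyDeviceToHost);
    cudaFree(dev);
    return err == cudaSuccess ? 0 : -1;
}

int main() {
    const int rows = 4;
    int m[rows * rows];
    if (fill_triangle(m, rows) != 0) { std::printf("CUDA error\n"); return 1; }
    for (int r = 0; r < rows; ++r) {
        for (int j = 0; j < rows; ++j) std::printf("%3d", m[r * rows + j]);
        std::printf("\n");
    }
    return 0;
}
```

The parent kernel deliberately avoids a device-side synchronize and relies on the implicit parent/child completion rule instead. Keep the number of rows modest, since the runtime limits how many child launches can be pending at once.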