Graphic Processor Units for Many-particle Dynamics

World's Top Design & Manufacturing Companies Cut Engineering Simulation Times in Half Using GPU-Accelerated Abaqus FEA

Two of the largest automakers in Europe evaluated Abaqus FEA to analyze the structural behavior of large engine models. In each case their NVIDIA GPU-accelerated simulations were completed in half the time it would have taken using CPUs alone. For the automotive industry, this acceleration enables CAE engineers to perform more analyses in a given period of time, identifying issues earlier and decreasing time to market.

Atlanta, GA, Jun 1, 2011 - AccelerEyes today released version 1.0 of libJacket, which lets GPU programmers achieve better GPU performance with less programming effort. It builds on the success of Jacket for MATLAB®, bringing that popular runtime system and its large GPU function library to new programming languages.

Whether racing to model fast-moving financial markets, exploring mountains of geological data, or researching solutions to complex scientific problems, you need a computing platform that delivers the highest throughput and lowest latency possible. GPU-accelerated clusters and workstations are widely recognized for providing the tremendous horsepower required to perform compute-intensive workloads, and your applications can achieve even faster results with NVIDIA GPUDirect™.

Read more: NVIDIA ARMs Itself for Heterogeneous Computing...

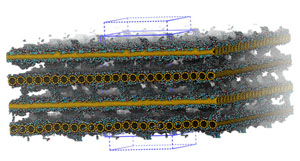

Random Images

Login Form

Visitors Counter

© 2009-2026 by GPIUTMD

Latest News

$150,000 AWARD FOR RESEARCHERS, UNIVERSITIES WORLDWIDE

NVIDIA is calling on global researchers to submit their innovations for the NVIDIA Global Impact Award - an annual grant of $150,000 for groundbreaking work that addresses the world's most important social and humanitarian problems.

Unified Memory in CUDA 6

With CUDA 6, we’re introducing one of the most dramatic programming model improvements in the history of the CUDA platform, Unified Memory. In a typical PC or cluster node today, the memories of the CPU and GPU are physically distinct and separated by the PCI-Express bus. Before CUDA 6, that is exactly how the programmer has to view things. Data that is shared between the CPU and GPU must be allocated in both memories, and explicitly copied between them by the program. This adds a lot of complexity to CUDA programs.
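As a rough illustration of the difference described above, the following minimal sketch uses `cudaMallocManaged` (the CUDA runtime call for Unified Memory, available from CUDA 6) so that a single pointer is used by both the CPU and the GPU, with no explicit `cudaMemcpy` calls. The kernel `scale` and the helper `scale_first_element` are hypothetical names chosen for this example, not taken from the article.

```cuda
#include <cstdio>
#include <cuda_runtime.h>

// Hypothetical kernel: multiply every element by a constant factor.
__global__ void scale(float *data, int n, float factor) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) data[i] *= factor;
}

// Hypothetical helper: fill an array with 1.0f on the CPU, scale it on the
// GPU, and return the first element as read back by the CPU.
// Returns -1.0f if any CUDA call fails.
float scale_first_element(int n, float factor) {
    float *data = nullptr;

    // One allocation, visible to both host and device code.
    if (cudaMallocManaged(&data, n * sizeof(float)) != cudaSuccess) return -1.0f;

    // The CPU writes directly through the managed pointer.
    for (int i = 0; i < n; ++i) data[i] = 1.0f;

    // The GPU uses the same pointer; no host-to-device copy is written here.
    const int threads = 256;
    const int blocks = (n + threads - 1) / threads;
    scale<<<blocks, threads>>>(data, n, factor);

    // Wait for the kernel to finish before the CPU touches the data again.
    if (cudaDeviceSynchronize() != cudaSuccess) {
        cudaFree(data);
        return -1.0f;
    }

    float first = data[0];  // The CPU reads the result directly.
    cudaFree(data);
    return first;
}

int main() {
    printf("first element after scaling: %f\n", scale_first_element(1 << 20, 2.0f));
    return 0;
}
```

Without Unified Memory, the same program would need a host buffer, a separate `cudaMalloc` device buffer, and a `cudaMemcpy` in each direction around the kernel launch.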

Copyright © 2014. GPIUTMD - Graphic Processor Units for Many-particle Dynamics

Isfahan University of Technology

Address: Esteghlal Sq., Isfahan, Iran

Postal Code: 8415683111

Phone: +98 311 391 3110

Fax: +98 311 391 2718